Human-AI Masterclass

A training program to help build the human judgment, hands-on Human-AI collaboration skills and enterprise AI tool fluency that your teams need to reinvent their work in the age of AI.

What it offers

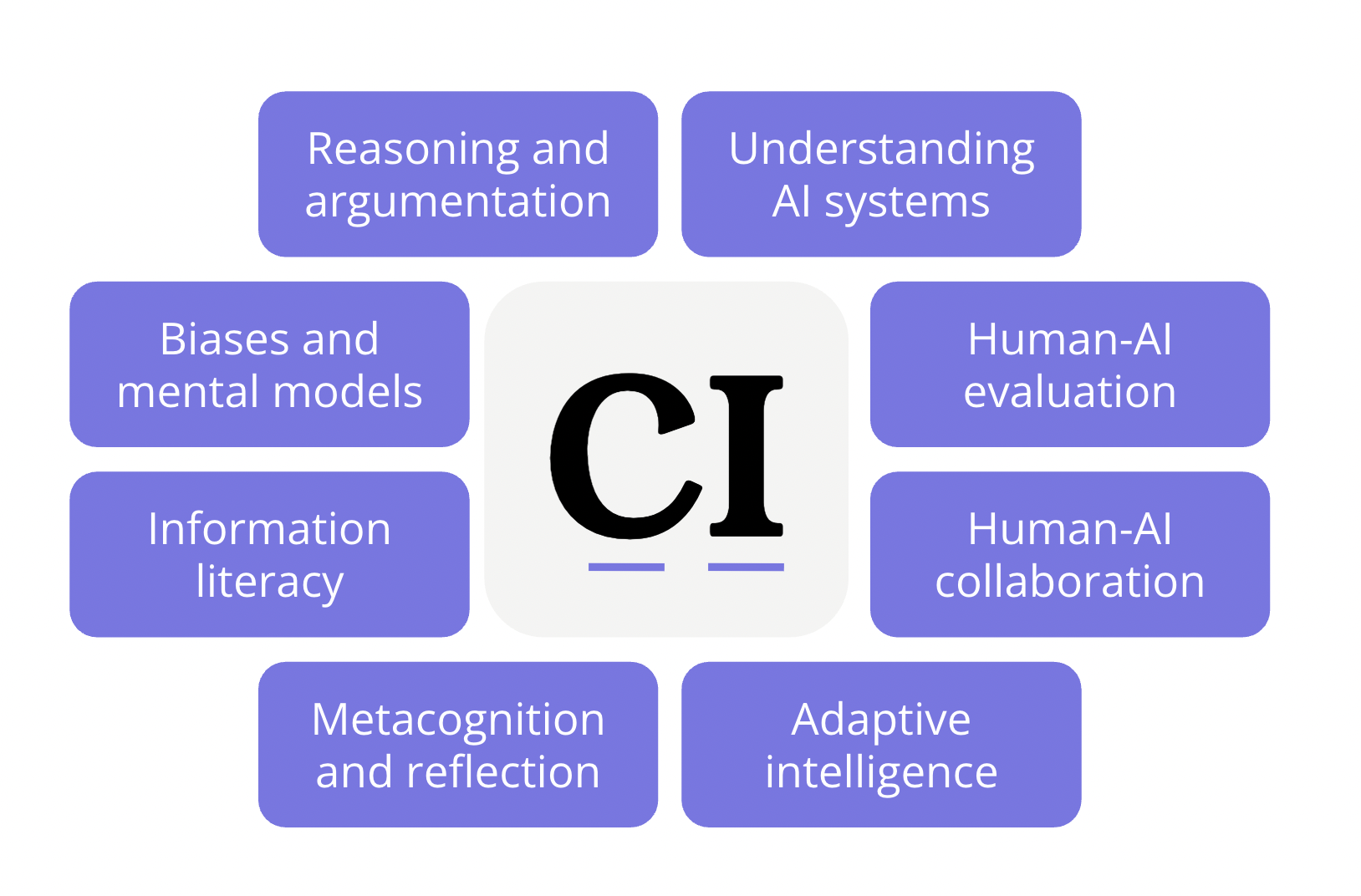

A practical masterclass training program that develops Critical Intelligence (the judgment, reasoning, and bias-awareness skills every employee needs) and pairs it with hands-on proficiency in real enterprise AI tools. Participants learn to augment their work, design effective Human–AI workflows, and deliver real results through a structured mini-project.

Most teams are told to “use AI,” but not taught how to work with it: which tools to choose, how to evaluate outputs, what collaboration mode to adopt, or how their collaboration style shapes outcomes.

This masterclass gives participants deep cognitive foundations and real applied experience using 3–5 enterprise-grade AI tools (e.g., Microsoft Copilot, chosen based on what you want to use). Through guided exercises and a live 3–6 hour mini-project, cross-functional teams learn how to design, test, and refine Human–AI collaboration patterns that elevate their work instead of degrading it.

What you’ll learn

By the end, participants don’t just understand AI and how to work with it. They’ve practiced working with it, stress-tested their instincts, learned where it helps and where it misleads, and discovered how their own collaboration style drives quality, speed, and creativity.

Critical Intelligence foundations. How to identify the right questions to answer, evaluate arguments and outputs, detect bias, and maintain human judgment.

Human–AI collaboration modes. The four operating modes (Delegate, Collaborate, Supervise, Generate) and when to use each for different categories of work.

Practical tool fluency. Exposure to 3–5 enterprise AI tools and hands-on instruction on how to use them effectively, interpret their outputs, and understand their limitations.

Choosing the right tool for the task. A decision framework for mapping tools to workflows, complexity levels, risk profiles, and required evidence standards.

Human-AI evaluation. Multi-dimensional rubrics to evaluate clarity, evidence quality, and reasoning soundness, and to spot bias (and to have AI evaluate your own work too!).

Team-based mini project. A structured 3–6 hour simulation where participants form teams, tackle a real business challenge, and use Human-AI collaboration to solve it.

Collaboration-focused judging. Output quality is reviewed, but the primary evaluation is how well teams collaborated with AI, the reasoning behind their approach, and how effectively they used tools.

How it works

The Human-AI Masterclass is delivered in two parts, often over one or two days:

Part 1:

Human-AI Understanding and Mental Models

Foundational modules covering how AI works, which tools to use for which tasks, critical thinking, information literacy, AI evaluation, bias awareness, and human–AI collaboration modes, with rapid-fire exercises to strengthen thinking.

Part 2:

Applied Human–AI Collaboration

Participants work through hands-on sessions using multiple enterprise tools, learn how to choose between tools, and complete a structured team mini-project on a real business challenge. Teams design a workflow, distribute tasks between humans and AI, and present their process and outcomes.

Participants leave with a capability plan, rubric templates to apply in their daily work, recommended tools, and a clear, practical understanding of how to work in a Human–AI team day to day.

Get in touch.

If you’d like to speak to a real human about our services, please give us your contact details.